Deep Convolutional Neural Network for Semantic Segmentation

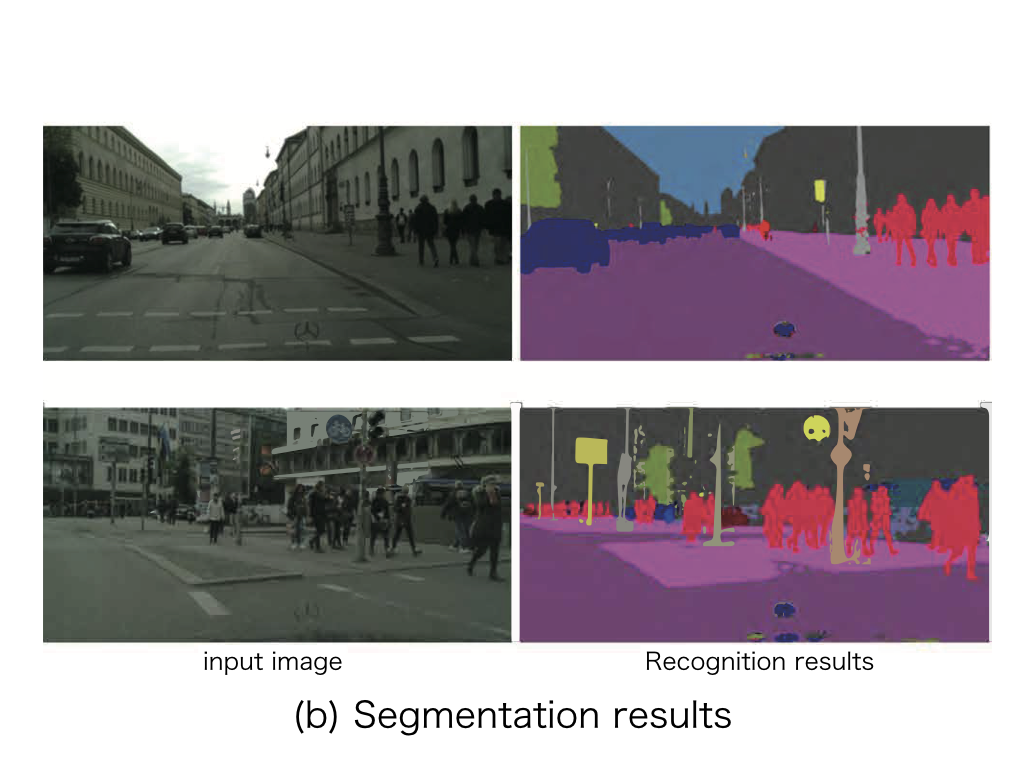

Semantic segmentation for CITYSCAPES DATASET by MNet_MPRG (overlay) from MPRG, Chubu University on Vimeo.

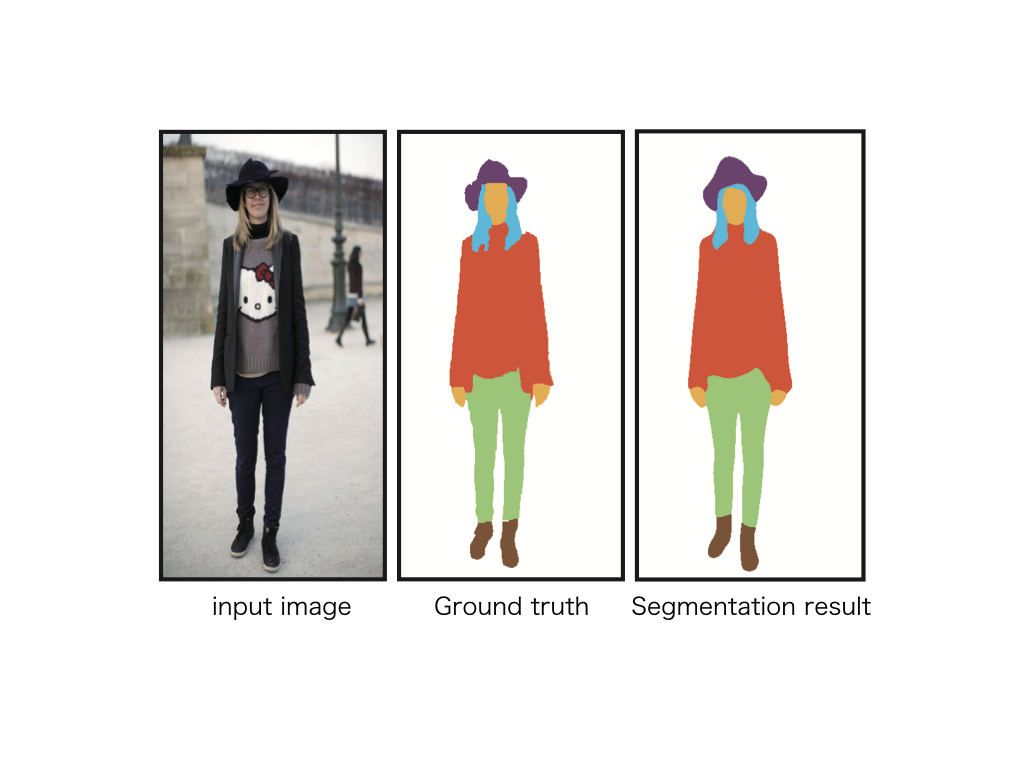

Semantic segmentation, the understanding of scenes from input images, is an important task in computer vision. Whereas object recognition estimates a single object category for the whole image, semantic segmentation estimates an object category for each pixel. SegNet and the Fully Convolutional Network (FCN) are representative semantic segmentation methods.
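The difference between the two tasks can be illustrated with a minimal sketch. It assumes a network has already produced a score for each class at each pixel; the 2x2 score map, the class names, and the helper functions `classify_image` and `segment_image` are hypothetical and are not taken from any of the methods named above.

```python
def argmax(scores):
    """Return the index of the largest score."""
    return max(range(len(scores)), key=lambda i: scores[i])


def classify_image(score_map):
    """Object recognition: one label for the whole image.

    Assumption for this sketch: the image-level score of a class is the
    mean of its per-pixel scores.
    """
    n_classes = len(score_map[0][0])
    n_pixels = sum(len(row) for row in score_map)
    mean = [
        sum(px[c] for row in score_map for px in row) / n_pixels
        for c in range(n_classes)
    ]
    return argmax(mean)


def segment_image(score_map):
    """Semantic segmentation: one label per pixel."""
    return [[argmax(px) for px in row] for row in score_map]


# Hypothetical 2x2 image with scores for 3 classes (0: road, 1: car, 2: person).
scores = [
    [[0.9, 0.1, 0.0], [0.8, 0.2, 0.0]],
    [[0.2, 0.7, 0.1], [0.1, 0.2, 0.7]],
]
image_label = classify_image(scores)   # a single class index
pixel_labels = segment_image(scores)   # a 2x2 grid of class indices
```

Here `image_label` is a single index for the whole image, while `pixel_labels` keeps the spatial layout and assigns the car and person pixels their own classes.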

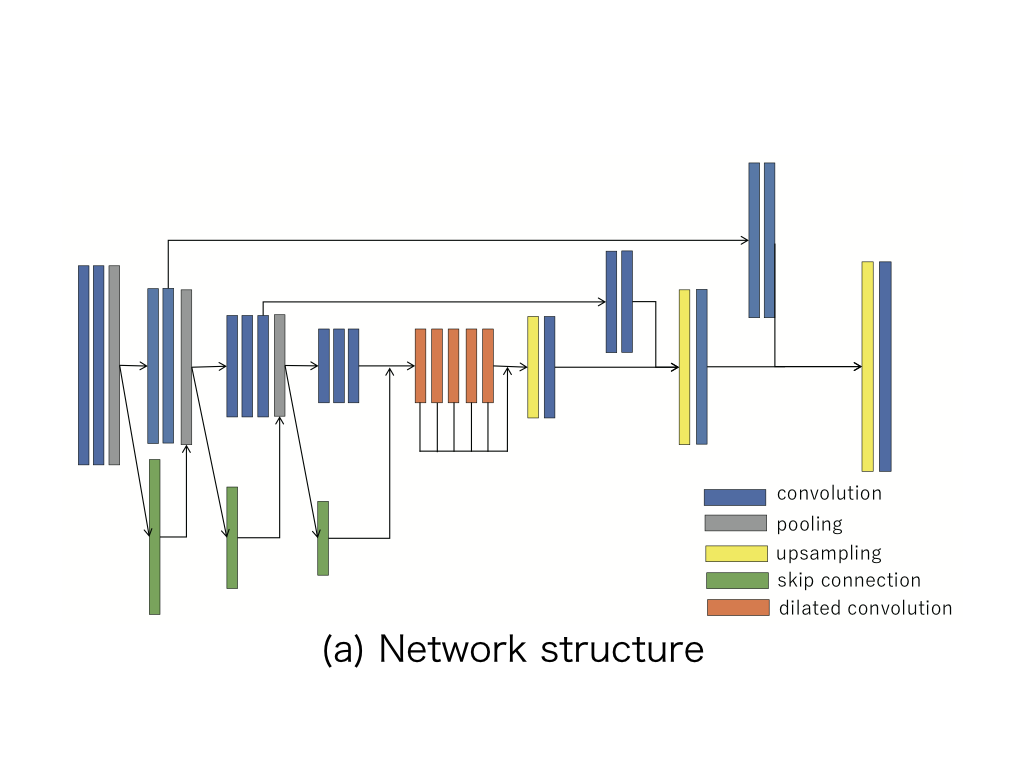

Multiple Dilated Convolution Blocks for Semantic Segmentation

Object scale in vehicle camera images varies with the distance between the camera and an object such as a pedestrian or a vehicle. Therefore, we propose Multiple Dilated Convolution Blocks, which handle a range of object scales. Our approach can extract both global and local context. When evaluated on the Cityscapes dataset benchmark, our approach outperformed conventional semantic segmentation methods such as FCN and SegNet.
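How dilation relates to object scale can be shown with a small sketch. The page does not describe the internal layout of the proposed blocks, so the structure below (the same 3x3 kernel applied at dilation rates 1, 2 and 4, with the branch outputs summed) is an assumption made for illustration, and `dilated_conv2d`, `multi_dilated_block` and `receptive_field` are hypothetical helper names.

```python
def dilated_conv2d(image, kernel, rate):
    """3x3 convolution (cross-correlation, as in deep learning frameworks)
    whose taps are `rate` pixels apart. Zero padding keeps the output size."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for ky in range(3):
                for kx in range(3):
                    sy = y + (ky - 1) * rate
                    sx = x + (kx - 1) * rate
                    if 0 <= sy < h and 0 <= sx < w:  # outside pixels count as 0
                        acc += kernel[ky][kx] * image[sy][sx]
            out[y][x] = acc
    return out


def multi_dilated_block(image, kernel, rates=(1, 2, 4)):
    """Assumed block layout: parallel branches at several rates, summed."""
    branches = [dilated_conv2d(image, kernel, r) for r in rates]
    h, w = len(image), len(image[0])
    return [[sum(b[y][x] for b in branches) for x in range(w)] for y in range(h)]


def receptive_field(rate, kernel_size=3):
    """Width in pixels covered by one dilated kernel."""
    return (kernel_size - 1) * rate + 1


image = [[1.0] * 9 for _ in range(9)]
kernel = [[1.0] * 3 for _ in range(3)]
output = multi_dilated_block(image, kernel)
fields = [receptive_field(r) for r in (1, 2, 4)]  # [3, 5, 9]
```

A rate of 1 covers a 3-pixel-wide neighborhood (local context), while a rate of 4 covers 9 pixels with the same nine weights, which is one way a block can respond to both small, distant objects and large, nearby ones.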

2D-QRNN for Semantic Segmentation

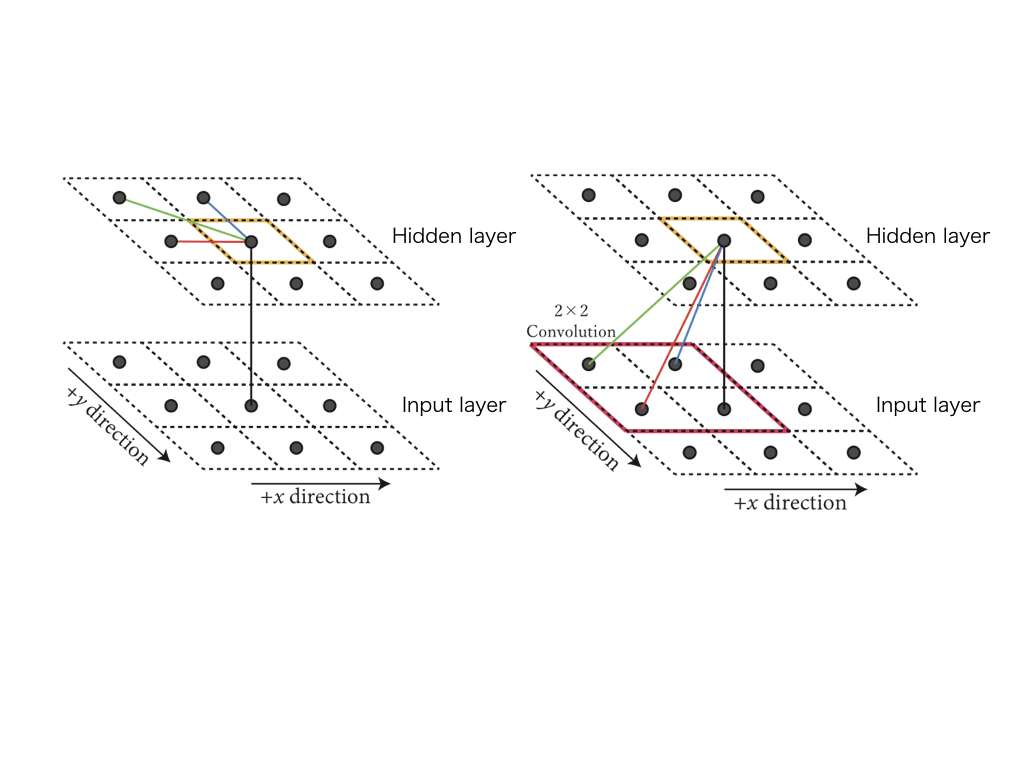

Directed Acyclic Graph Recurrent Neural Networks (DAG-RNNs) can improve the accuracy of semantic segmentation by extracting context over the global spatial extent of the image. A DAG-RNN comprises a 2D RNN installed in a Deep Convolutional Neural Network (DCNN). However, RNN calculations are time-consuming because the hidden layer of an RNN cannot be computed in parallel. Therefore, we propose the 2D Quasi-Recurrent Neural Network (2D-QRNN), which extends the QRNN to 2D, and achieve both high accuracy and faster semantic segmentation.
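The speed advantage of a quasi-recurrent layer comes from where the sequential work sits. In a QRNN, the candidate values and gates are produced by convolutions that can run in parallel over all positions, and only a cheap elementwise pooling step is sequential. The sketch below shows that pooling step (f-pooling) on scalar values. The 2D sweep in `sweep_2d` (rows first, then columns) is an assumption made for illustration, since the page does not specify how the extension to 2D is done; the candidates `z` and gates `f` are taken as given rather than computed by convolutions.

```python
def f_pool(z, f, h0=0.0):
    """QRNN f-pooling along one sequence.

    h_t = f_t * h_{t-1} + (1 - f_t) * z_t
    z: candidate values, f: forget gates in [0, 1], both precomputed.
    """
    h, out = h0, []
    for z_t, f_t in zip(z, f):
        h = f_t * h + (1.0 - f_t) * z_t
        out.append(h)
    return out


def sweep_2d(z, f):
    """Assumed 2D extension: pool left-to-right along each row,
    then top-to-bottom along each column of the row result."""
    rows = [f_pool(z_row, f_row) for z_row, f_row in zip(z, f)]
    cols = [
        f_pool(list(col), list(f_col))
        for col, f_col in zip(zip(*rows), zip(*f))
    ]
    return [list(r) for r in zip(*cols)]  # transpose back to row-major


sequence = f_pool([1.0, 1.0, 1.0], [0.5, 0.5, 0.5])  # [0.5, 0.75, 0.875]
grid = sweep_2d([[1.0, 1.0], [1.0, 1.0]], [[0.5, 0.5], [0.5, 0.5]])
```

Because each step of `f_pool` is a multiply and an add with no matrix product on the hidden state, the sequential part stays small compared with a conventional RNN, where the hidden-to-hidden transformation must be evaluated step by step.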