Team C^2M : APC RGB-D+PointCloud Dataset 2015

The Amazon Picking Challenge (APC) is a competition on automating product distribution: a robot must recognize a specified item among the items placed on a shelf and pick it. This page publishes the evaluation Dataset that Team C^2M used for APC2015. The Dataset includes three types of data: RGB images acquired by the Mitsubishi MELFA-3D Vision, depth images, and 3-D point clouds. For quantitative evaluation, ground-truth images are also provided in which each item is labeled with its assigned color.

Overview of the Dataset

The 25 items used in APC2015 vary in shape and other attributes: some are packaged in boxes or vinyl, and some, such as stuffed toys, are non-rigid. The data formats are listed below.

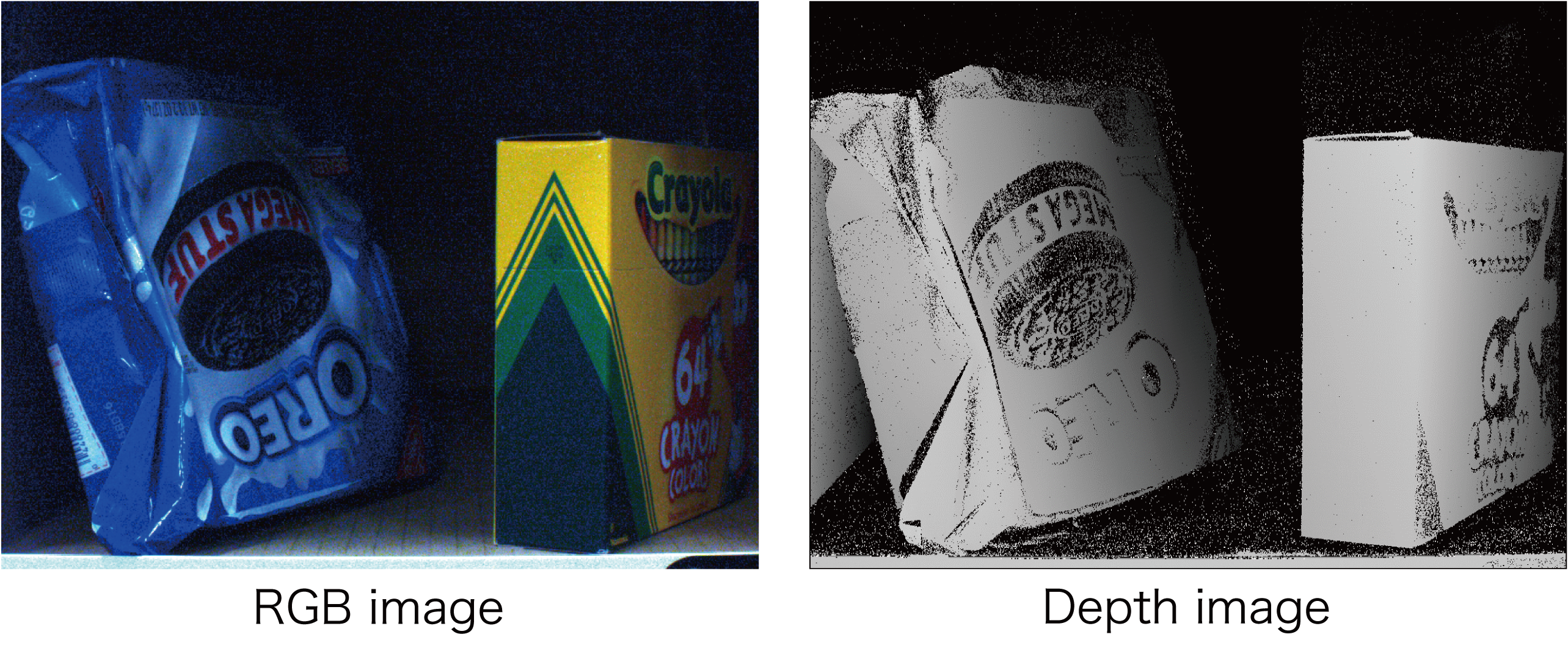

RGB images (color_img.bmp)

・Size: 1280 x 960 pixels

Depth image (depth.bmp)

・Size: 1280 x 960 pixels

・Represents distance as grayscale values between 0 and 255

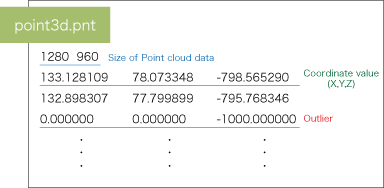

Point cloud data (point3d.pnt)

・Each data point includes an X coordinate, Y coordinate, and Z coordinate

・Contains 1280 x 960 points in total

・Points whose coordinates could not be measured are assigned the value -1000
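The layout of point3d.pnt is not specified on this page. The Python sketch below assumes, hypothetically, a plain-text file with one whitespace-separated "X Y Z" triple per line in row-major pixel order. It reshapes the points to the 1280 x 960 image grid and masks out the -1000 placeholders. Check the real file format before relying on it.

```python
import numpy as np

WIDTH, HEIGHT = 1280, 960
INVALID = -1000.0  # placeholder for points whose coordinates could not be measured


def load_point_cloud(source, width=WIDTH, height=HEIGHT):
    """Return an (height, width, 3) XYZ array and an (height, width) validity mask.

    ASSUMPTION (hypothetical format): one whitespace-separated "X Y Z" line per
    point, in row-major pixel order. `source` is a path or an iterable of lines.
    """
    xyz = np.loadtxt(source, dtype=np.float64, ndmin=2)
    if xyz.shape != (width * height, 3):
        raise ValueError(f"expected {width * height} points, got array of shape {xyz.shape}")
    cloud = xyz.reshape(height, width, 3)
    # Treat a point as invalid if any of its coordinates holds the -1000 placeholder.
    valid = ~np.any(cloud == INVALID, axis=-1)
    return cloud, valid
```

With `cloud, valid = load_point_cloud("point3d.pnt")`, the expression `cloud[valid]` yields only the measured points as an (N, 3) array.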

Labeling data

Labeling data is created for each scene. The region of each item present in a scene is painted in the color assigned to that item, and the background region is painted black.

The item number corresponding to each item in the labeling data is stored in the “APC_image.png” file, and the color code for each item is stored in the “APC_item_ColorCode.xlsx” file.

・Ground truth image (label.bmp)

・An image showing which APC item corresponds to each Dataset item number (apc_item.png)

・An Excel file listing the correspondence between segmentation colors and item numbers (item_correspondence_table.xlsx)

| Item ID | R | G | B |
|---|---|---|---|
| BG | 0 | 0 | 0 |
| Item 1 | 128 | 0 | 0 |
| Item 2 | 255 | 0 | 0 |
| Item 3 | 0 | 128 | 0 |
| Item 4 | 128 | 128 | 0 |
| Item 5 | 255 | 128 | 0 |
| Item 6 | 0 | 255 | 0 |
| Item 7 | 128 | 255 | 0 |
| Item 8 | 255 | 255 | 0 |
| Item 9 | 0 | 0 | 128 |
| Item 10 | 128 | 0 | 128 |
| Item 11 | 255 | 0 | 128 |
| Item 12 | 0 | 128 | 128 |
| Item 13 | 128 | 128 | 128 |
| Item 14 | 255 | 128 | 128 |
| Item 15 | 0 | 255 | 128 |
| Item 16 | 128 | 255 | 128 |
| Item 17 | 255 | 255 | 128 |
| Item 18 | 0 | 0 | 255 |
| Item 19 | 128 | 0 | 255 |
| Item 20 | 255 | 0 | 255 |
| Item 21 | 0 | 128 | 255 |
| Item 22 | 128 | 128 | 255 |
| Item 23 | 255 | 128 | 255 |
| Item 24 | 0 | 255 | 255 |
| Item 25 | 128 | 255 | 255 |
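The label colors follow a regular pattern: writing the item ID in base 3, the lowest digit selects the R level, the next digit the G level, and the highest digit the B level, each from {0, 128, 255} (item 12 is 110 in base 3, giving (0, 128, 128)). The Python sketch below uses that observation to regenerate the colors and to convert a label.bmp array into a map of item IDs. The function names are hypothetical. The sketch expects RGB channel order; images read with OpenCV's `cv2.imread` are in BGR order and must be reversed first (for example `img[..., ::-1]`).

```python
import numpy as np

LEVELS = (0, 128, 255)
NUM_ITEMS = 25


def item_color(item_id):
    """Return the (R, G, B) label color for item_id (0 = background)."""
    # Base-3 digits of the ID pick the R, G and B levels, lowest digit first.
    return (LEVELS[item_id % 3], LEVELS[(item_id // 3) % 3], LEVELS[(item_id // 9) % 3])


def label_to_ids(label_rgb):
    """Convert an (H, W, 3) RGB label image to an (H, W) array of item IDs.

    Pixels whose color matches no entry of the table are set to -1.
    """
    ids = np.full(label_rgb.shape[:2], -1, dtype=np.int32)
    for item_id in range(NUM_ITEMS + 1):
        color = np.array(item_color(item_id), dtype=label_rgb.dtype)
        ids[np.all(label_rgb == color, axis=-1)] = item_id
    return ids
```

Because 26 IDs fit within three base-3 digits, every item (and the background) gets a distinct color.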

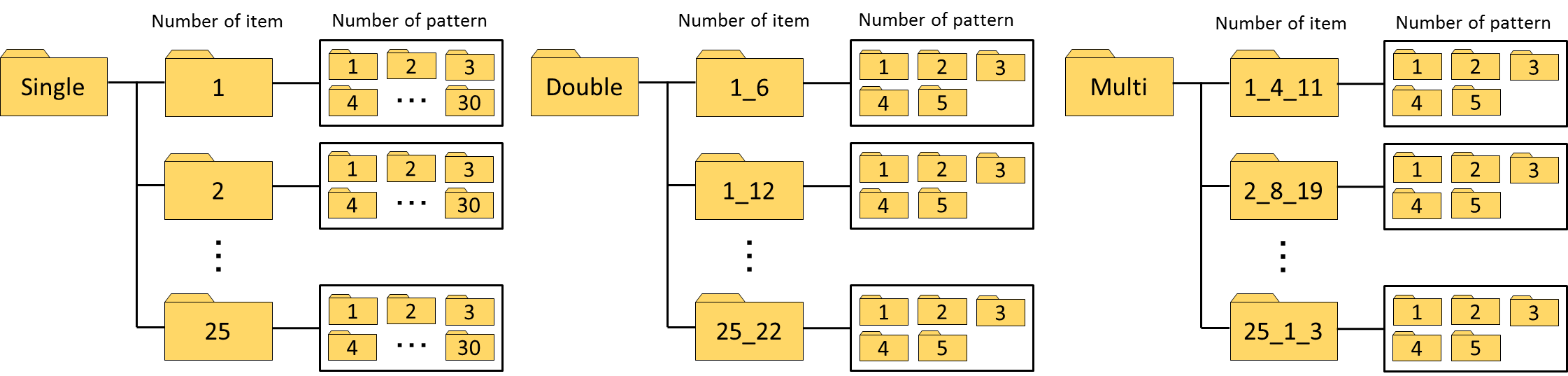

Dataset structure

The Dataset covers three classes of scenes: a Single-Item Bin containing items of one type, a Double-Item Bin containing items of two types, and a Multi-Item Bin containing items of three or more types.

・Single-Item Bin: 30 scenes for each item (total of 750 scenes)

・Double-Item Bin: Five patterns for each of 75 combinations of two items (total of 375 scenes)

・Multi-Item Bin: Five scenes for each of 35 combinations of three items and five combinations of four items (total of 200 scenes)

The folder structure for the three classes of scenes is described below.

The folder names correspond to the item numbers assigned by our team. For example, [Double] [1_6] indicates that items 1 and 6 are both present in the same bin.
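A combination folder name can be split on underscores to recover the item numbers it contains. The helper below is a hypothetical sketch based only on the [1_6] example; it also infers the scene class from the number of items (one, two, or three or more).

```python
def parse_combination(name, num_items=25):
    """Split a folder name such as "1_6" into item numbers and infer the scene class.

    ASSUMPTION: item numbers are joined with "_" as in the [1_6] example.
    """
    items = tuple(int(part) for part in name.split("_"))
    if any(not 1 <= item <= num_items for item in items):
        raise ValueError(f"item number out of range in {name!r}")
    if len(items) == 1:
        scene_class = "Single"
    elif len(items) == 2:
        scene_class = "Double"
    else:
        scene_class = "Multi"
    return scene_class, items
```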

Evaluation method

For robot picking, estimating the 6D pose of the item would be ideal. However, this Dataset includes non-rigid items and other items for which 6D pose estimation is difficult. We therefore adopted two evaluation methods: evaluation by estimated coordinates and evaluation by segmentation region.

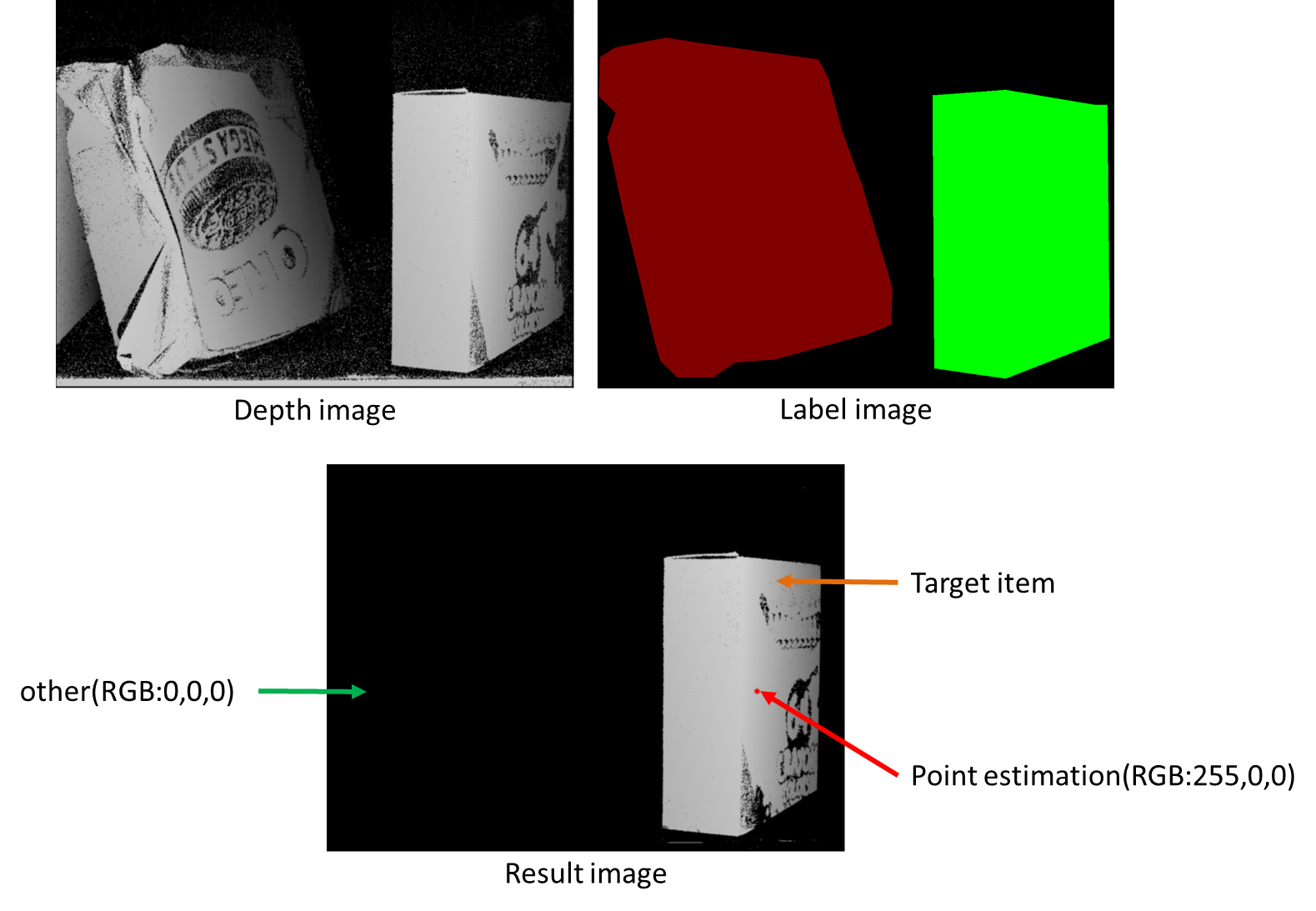

- Evaluation by estimated coordinates

In evaluation by estimated coordinates, recognition is judged successful if the estimated coordinates of the target item lie within that item's region in the labeling image, and failed otherwise.
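The success criterion amounts to a lookup in the ground-truth label. This Python sketch assumes that the label image has already been converted into a 2-D array of item IDs and that the estimated coordinates are pixel coordinates (x = column, y = row); both are assumptions, not documented details of the evaluation software, and the function name is hypothetical.

```python
import numpy as np


def coordinate_success(item_ids, target_id, point):
    """Return True if the estimated point (x, y) lies in the target item's region.

    item_ids: (H, W) array of item IDs decoded from label.bmp.
    point: estimated (x, y) pixel position; x is the column, y the row.
    """
    x, y = point
    h, w = item_ids.shape
    if not (0 <= x < w and 0 <= y < h):
        return False  # a point outside the image is counted as a failure
    return bool(item_ids[y, x] == target_id)
```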

- Evaluation by segmentation region

In evaluation by segmentation, the segmentation result of the depth image is compared with the labeling data pixel by pixel, and the detection rate (recall) and precision are calculated over all pixels in the image. The F value is then computed from these two values. The segmentation is judged successful if the F value is equal to or higher than a threshold (default: 0.5) and failed otherwise.
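Assuming the F value is the usual F-measure, i.e. the harmonic mean F = 2PR / (P + R) of precision P and detection rate (recall) R, the pixel-wise comparison can be sketched as follows. Both inputs are boolean masks of the target item's pixels; the function names are hypothetical.

```python
import numpy as np


def segmentation_f_value(pred_mask, gt_mask):
    """Pixel-wise F value between predicted and ground-truth binary masks."""
    pred = np.asarray(pred_mask, dtype=bool)
    gt = np.asarray(gt_mask, dtype=bool)
    tp = np.count_nonzero(pred & gt)  # pixels labeled as the item in both masks
    if tp == 0:
        return 0.0
    precision = tp / np.count_nonzero(pred)
    recall = tp / np.count_nonzero(gt)  # the "detection rate"
    return 2 * precision * recall / (precision + recall)


def segmentation_success(pred_mask, gt_mask, threshold=0.5):
    """Success if the F value reaches the threshold (0.5 by default, as in the text)."""
    return segmentation_f_value(pred_mask, gt_mask) >= threshold
```

For example, a prediction that covers two pixels, one of which is among the two ground-truth pixels, has P = R = 0.5 and therefore F = 0.5, which just passes the default threshold.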

Download

・Single-Item Bin Dataset: Team C^2M : APC RGB-D+PointCloud Dataset 2015 (Single-Item Bin) (~4.5GB)

・Double-Item Bin Dataset: Team C^2M : APC RGB-D+PointCloud Dataset 2015 (Double-Item Bin) (~3.2GB)

・Multi-Item Bin Dataset: Team C^2M : APC RGB-D+PointCloud Dataset 2015 (Multi-Item Bin) (~1.6GB)

・Full Dataset: Team C^2M : APC RGB-D+PointCloud Dataset 2015 (FULL) (~9.3GB)

・Evaluation software: APC_evaluation_Web.zip (~13KB)

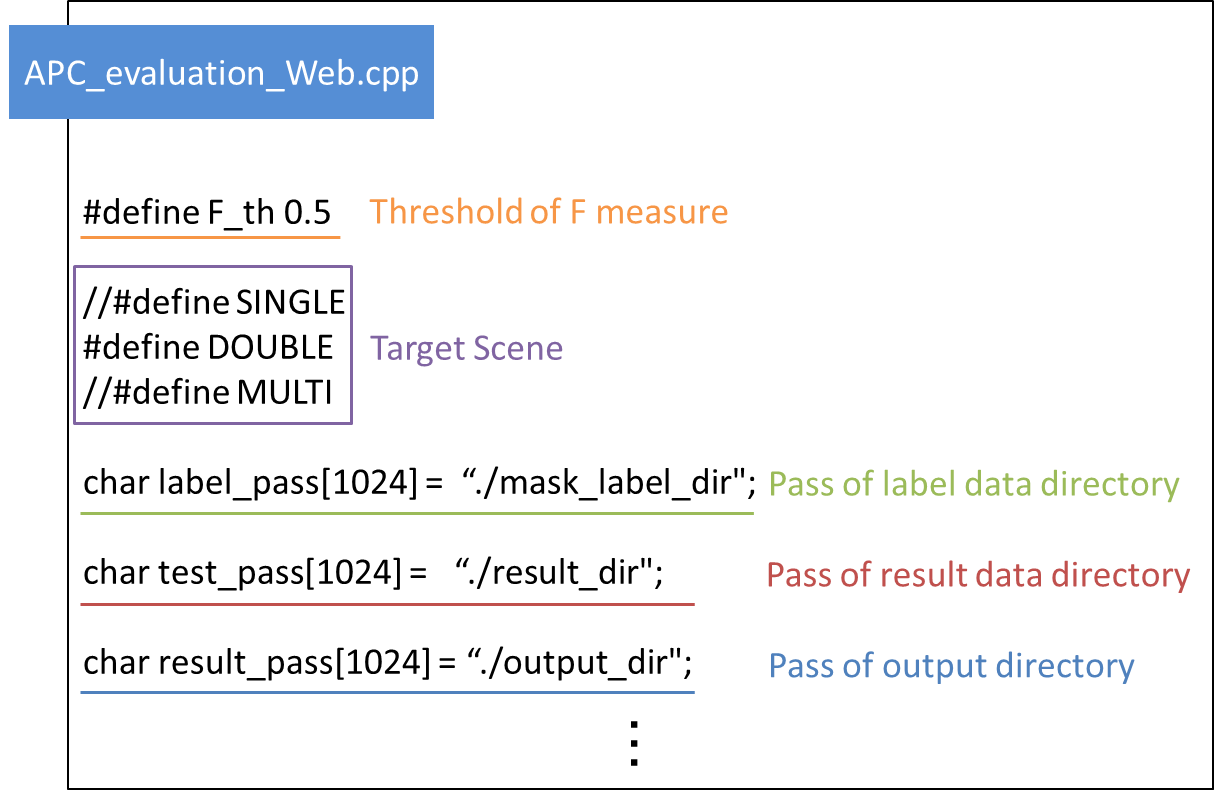

How the evaluation software is used

The evaluation software was developed in the environment listed below. It may not work correctly with OpenCV 1.x.x or OpenCV 3.x.x, so please keep this in mind.

・OpenCV 2.4.10

The evaluation software requires the data listed below.

・Evaluation software

・Result image “result.bmp”

– Image size: 1280 x 960 pixels

– Estimated coordinates marked in red (RGB value: 255,0,0)

– Pixels of the depth image that do not belong to the target item are painted black (RGB value: 0,0,0)

・Labeling data (for the target scenes of the Dataset)
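A result image in the format above could be assembled as in the following Python sketch. It assumes, as an interpretation rather than a documented rule, that pixels of the target item keep their grayscale depth value and that the estimated position is marked with a single red pixel; the function name is hypothetical.

```python
import numpy as np

RED = (255, 0, 0)  # RGB value used to mark the estimated coordinates


def make_result_image(depth, target_mask, point):
    """Build an RGB result image from a depth image, a target mask and a point.

    depth: (H, W) uint8 depth image (1280 x 960 in the Dataset).
    target_mask: boolean (H, W) mask of pixels assigned to the target item.
    point: estimated (x, y) position; x is the column, y the row.
    """
    if depth.shape != target_mask.shape:
        raise ValueError("depth image and mask must have the same shape")
    result = np.zeros(depth.shape + (3,), dtype=np.uint8)  # start all black
    # Copy the gray depth value of each target pixel into R, G and B.
    result[target_mask] = depth[target_mask][:, None]
    x, y = point
    result[y, x] = RED
    return result
```

To save it with OpenCV, convert to BGR first, e.g. `cv2.imwrite("result.bmp", cv2.cvtColor(result, cv2.COLOR_RGB2BGR))`. Note that a target pixel whose depth value is 0 would be indistinguishable from the black background under this assumption.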

When using the evaluation software, the following settings are required.

・Scenes to be evaluated (Single-Item Bin, Double-Item Bin, Multi-Item Bin)

・Paths to the data required for evaluation (ground-truth label images, images to be evaluated, and the output location for evaluation results)

・F value threshold for segmentation evaluation (default: 0.5)

A “result.bmp” file is required. It holds the result of segmenting a depth image from the evaluation Dataset, and the estimated position of the object must be marked in red (RGB value: 255,0,0) in the segmented image beforehand. The evaluation results must use the same folder structure as the evaluation Dataset. For example, the [Double]-[1_6]-[1] folder must contain a [1] folder for the recognition results for item 1 and a [6] folder for the recognition results for item 6.
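The required output layout can be derived from the Dataset folder names. In this Python sketch, the function name, the output root, and the placement of result.bmp directly inside each item folder are assumptions extrapolated from the [Double]-[1_6]-[1] example.

```python
from pathlib import Path


def result_paths(output_root, scene_class, combination, scene_folder):
    """Return the result.bmp path for each item of one scene folder.

    For ("out", "Double", "1_6", "1") this yields
    out/Double/1_6/1/1/result.bmp and out/Double/1_6/1/6/result.bmp.
    ASSUMPTION: one result.bmp per item folder, mirroring the Dataset layout.
    """
    scene_dir = Path(output_root) / scene_class / combination / str(scene_folder)
    return [scene_dir / item / "result.bmp" for item in combination.split("_")]
```

Before writing each image, the folders can be created with `path.parent.mkdir(parents=True, exist_ok=True)`.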

When the program is run, it evaluates each scene with the methods described above and, once all processing is complete, writes the number of successes for each item to a text file (.txt).
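The per-item counting can be sketched as below. The layout of the software's actual text file is not described on this page, so the returned `{item_id: (successes, total)}` structure and the function name are hypothetical.

```python
from collections import defaultdict


def tally_successes(records):
    """Count successes per item from (item_id, succeeded) records.

    Returns {item_id: (successes, total)} sorted by item ID.
    """
    counts = defaultdict(lambda: [0, 0])
    for item_id, succeeded in records:
        counts[item_id][1] += 1  # every record is one evaluated attempt
        if succeeded:
            counts[item_id][0] += 1
    return {item: (s, t) for item, (s, t) in sorted(counts.items())}
```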

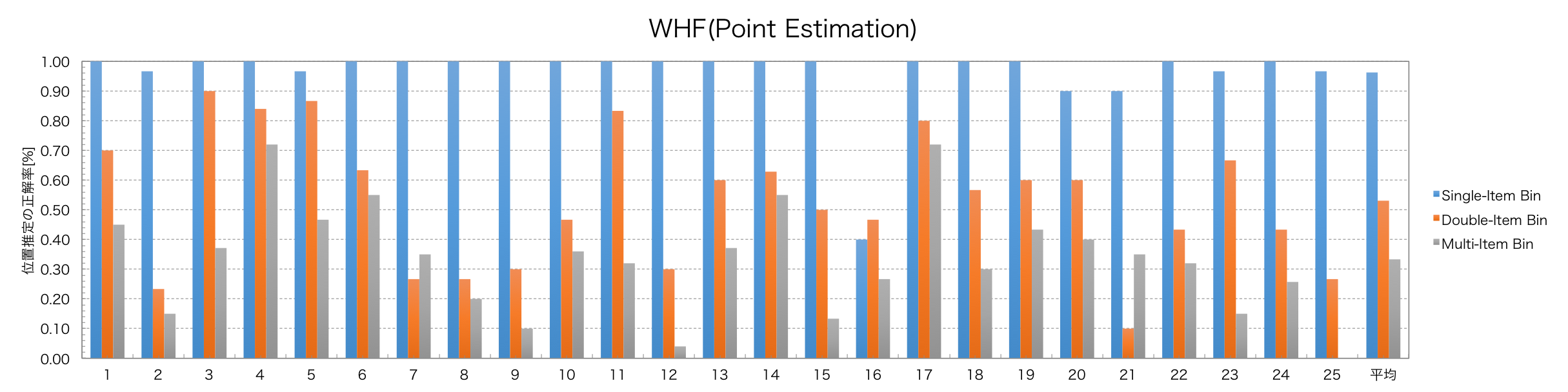

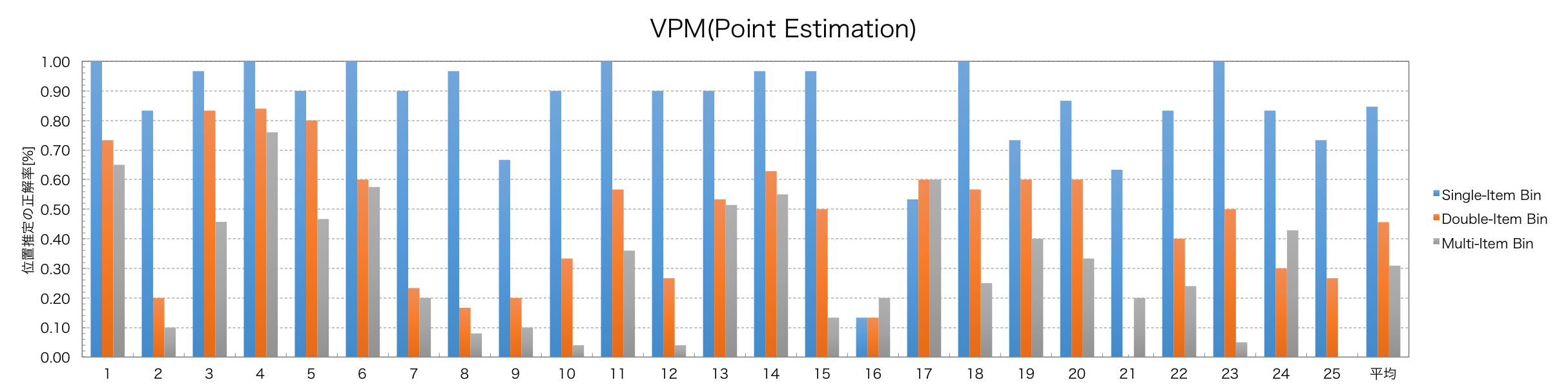

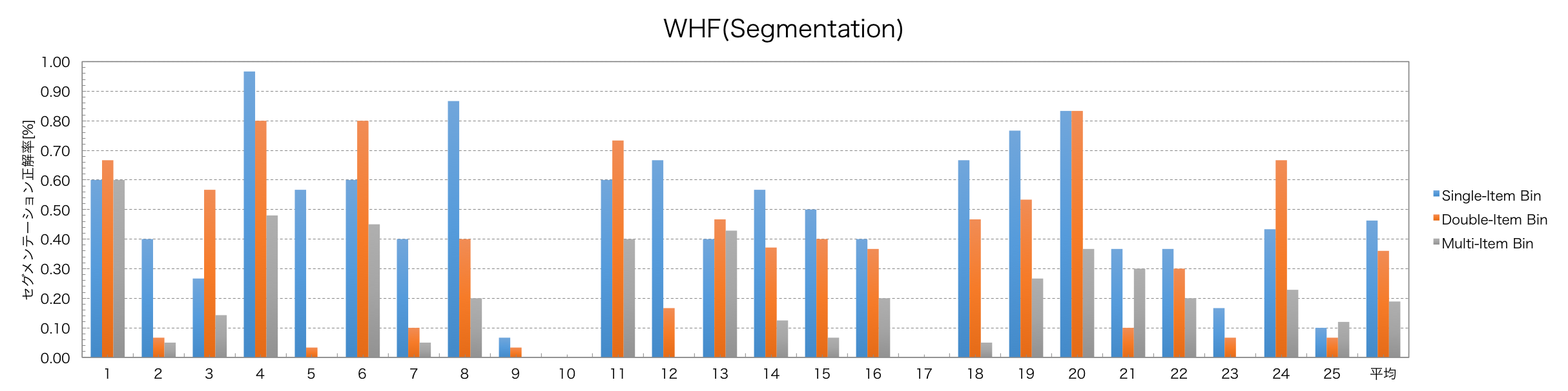

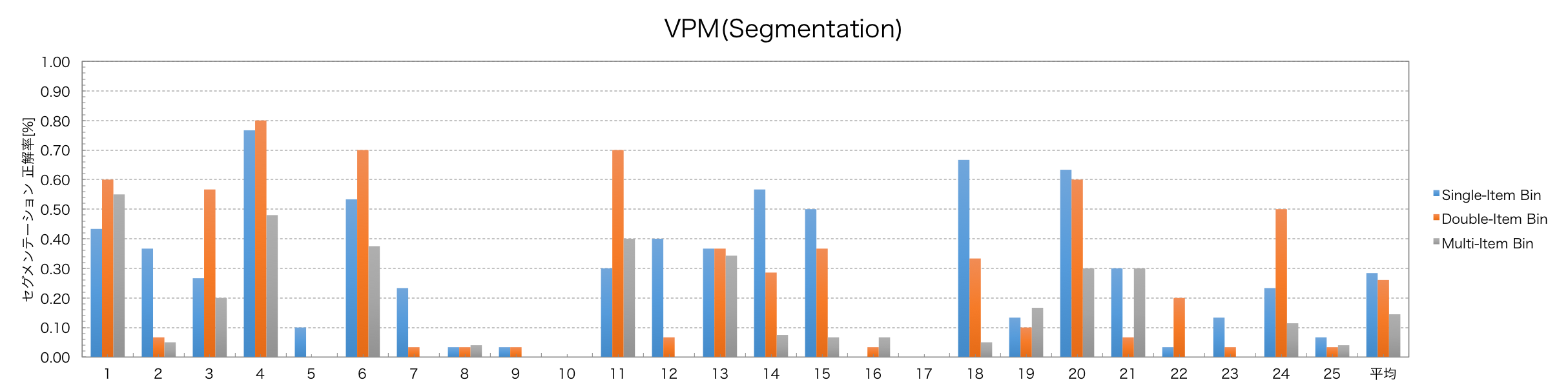

Evaluation of the Team C^2M recognition method

We used the software described above to evaluate the estimated coordinates and segmentation for the target items.

・Evaluation results: APC_eva.xlsx

Recognition methods evaluated

・Weighted Hough Forest (WHF): reduces recognition error by weighting the training samples so that the forest learns the shape features unique to the object to be recognized.

・Vector Pair Matching (VPM): achieves highly accurate recognition by using only those vector pairs that can be observed stably, selected from the vector pairs formed by three points in 3-D space.

- Evaluation by estimated coordinates

- Evaluation by segmentation region

*We would like to continue adding new evaluation results, and welcome all contributions of evaluation results for this Dataset.

Disclaimer

Although due care was taken in preparing the published program, data, and information, we make no guarantee concerning them. The Machine Perception and Robotics Group, which authored the program, the materials, and the Web site, accepts no responsibility for any damage caused by their use or by browsing this site, nor for any damage arising from program revisions or redistribution. Provided that you agree to these disclaimers, the published program may be used for research purposes only and at the user's own responsibility. For inquiries concerning commercial use, please contact the person below.

Communication:

Hironobu Fujiyoshi: hf@cs.chubu.ac.jp